Gemini 2.0 Launched – Key Features, Advancements, and the Future of AI

On February 5, 2025, Google DeepMind unveiled the Gemini 2.0 model family, a suite of AI systems engineered for the “agentic era,” where intelligent agents can reason, act, and interact with the world in increasingly human-like ways. Among these models, Gemini 2.0 Flash stands out as the workhorse: a powerful, low-latency model designed to power dynamic applications with multimodal reasoning and native tool integration. Other versions in the family include Gemini 2.0 Pro Experimental (optimized for coding and complex prompts), Gemini 2.0 Flash Thinking Experimental (which reveals its internal reasoning for improved explainability), and the cost-efficient Gemini 2.0 Flash-Lite, currently in public preview.

Key Features and Capabilities of Gemini 2.0 Models

Native Multimodal Support

Gemini 2.0 models come with integrated capabilities that set a new standard in AI functionality:

- Native Image Generation and Editing: Soon to be available, this feature will allow users to create or modify images directly.

- Native Text-to-Speech: Already available, it offers customizable speaking styles to match different moods or contexts.

- Tool Use: Agents can directly invoke tools like Google Search or execute code, making the AI not just reactive but also proactive in carrying out tasks.
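To make the tool-use idea concrete, here is a minimal sketch of how an application might route a model-issued tool call to local functions. Everything in it is hypothetical: the `TOOLS` registry, the `{"name": ..., "argument": ...}` call shape, and both stub tools are illustrative stand-ins, not part of any Gemini SDK.

```python
import ast
import operator
from typing import Callable, Dict

# Arithmetic operators the code-execution stub is willing to evaluate.
_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
}


def _evaluate(node: ast.AST) -> float:
    """Recursively evaluate a parsed expression made of numbers and + - * /."""
    if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
        return _OPS[type(node.op)](_evaluate(node.left), _evaluate(node.right))
    raise ValueError("unsupported expression")


def search_stub(query: str) -> str:
    """Stand-in for a search tool; returns a canned string, not real results."""
    return f"[stub results for: {query}]"


def run_code_stub(expression: str) -> str:
    """Stand-in for code execution; handles basic arithmetic only."""
    return str(_evaluate(ast.parse(expression, mode="eval").body))


# Hypothetical registry mapping tool names to local callables.
TOOLS: Dict[str, Callable[[str], str]] = {
    "search": search_stub,
    "run_code": run_code_stub,
}


def dispatch(tool_call: dict) -> str:
    """Route a tool call of the assumed form {"name": ..., "argument": ...}."""
    name = tool_call["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](tool_call["argument"])
```

In a real agent loop, the string returned by `dispatch` would be sent back to the model so it can continue reasoning with the tool's result.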

Scalability and Token Capacity

The models are built for long inputs and long-form output:

- Input Tokens: Supports up to 1 million tokens, enabling highly contextual conversations or analyses.

- Output Tokens: Can generate responses up to 8,000 tokens long, ideal for detailed content generation.

- Multimodal Inputs: Accepts text, image, video, and audio data, which makes it versatile for a variety of application domains.
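As a rough illustration of working within these limits, the sketch below checks a prompt against the 1 million input-token ceiling and caps a requested output length at 8,000 tokens. The four-characters-per-token estimate is an assumption made for the example; a real tokenizer will produce different counts, and the helper names are hypothetical.

```python
from dataclasses import dataclass

# Limits quoted in the list above.
MAX_INPUT_TOKENS = 1_000_000
MAX_OUTPUT_TOKENS = 8_000

# Assumption: a crude ~4 characters per token. Real token counts vary,
# especially for code or non-English text.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Estimate token count with ceiling division on character length."""
    return -(-len(text) // CHARS_PER_TOKEN)


@dataclass
class BudgetCheck:
    input_tokens: int   # estimated tokens in the prompt
    fits_input: bool    # True if the estimate is within the input limit
    output_cap: int     # requested output length, clamped to the output limit


def check_budget(prompt: str, requested_output_tokens: int) -> BudgetCheck:
    """Compare a prompt and a requested output length against the limits."""
    tokens = estimate_tokens(prompt)
    return BudgetCheck(
        input_tokens=tokens,
        fits_input=tokens <= MAX_INPUT_TOKENS,
        output_cap=min(requested_output_tokens, MAX_OUTPUT_TOKENS),
    )
```

A check like this is only a pre-flight guard; the service itself remains the authority on actual token counts.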

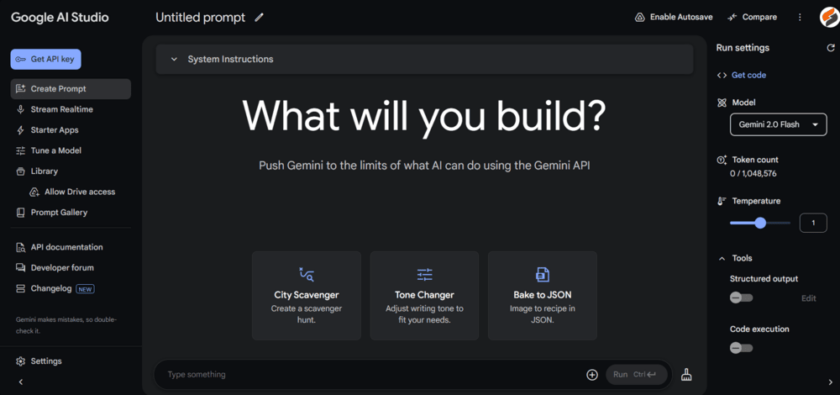

Integration Across Platforms

Gemini 2.0 Flash is available via:

- Google AI Studio

- Gemini API

- Vertex AI

- Gemini App

This ecosystem approach allows developers to integrate the model’s capabilities seamlessly into their products.
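For developers going the Gemini API route, a request can be assembled with nothing but the Python standard library. The sketch below only builds the request and does not send it. The endpoint URL, the `gemini-2.0-flash` model ID, and the `x-goog-api-key` header are assumptions based on the public Gemini REST API rather than details from this article, so check the current API reference before relying on them.

```python
import json
import urllib.request

# Assumptions (not stated in the article): REST endpoint, model ID,
# and API-key header of the public Gemini API.
BASE_URL = "https://generativelanguage.googleapis.com/v1beta/models"


def build_request(
    prompt: str,
    api_key: str,
    model: str = "gemini-2.0-flash",
) -> urllib.request.Request:
    """Build (but do not send) a generateContent request for a text prompt."""
    body = {"contents": [{"parts": [{"text": prompt}]}]}
    return urllib.request.Request(
        url=f"{BASE_URL}/{model}:generateContent",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "x-goog-api-key": api_key,
        },
        method="POST",
    )
```

With a valid key, passing the result to `urllib.request.urlopen` would send the call; that step is left out here so the example runs without network access.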

Real-World Applications and Developer Ecosystem

Pioneering Agentic Experiences

Gemini 2.0 Flash is at the core of the shift towards AI agents that are more proactive and context-aware. Use cases include:

- Coding Agents: Debugging, editing, and validating code through natural language commands.

- Gaming Assistants: Enhancing virtual worlds with interactive agents that can understand and react to player inputs.

- Multimodal Content Creation: Combining text, images, and audio to produce dynamic, engaging multimedia experiences.

Developer Showcases

Google has highlighted several projects that leverage Gemini 2.0’s capabilities:

- tldraw: A natural language interface for creative prototyping on an infinite canvas.

- Rooms: Enabling richer avatar interactions through advanced text and audio processing.

- Volly:

- Viggle and Toonsutra: Platforms that are exploring the boundaries of virtual characters and multilingual content translation.

These examples show that the Gemini 2.0 family is more than a technical breakthrough: developers are already building products on it across industries. Google has also showcased apps such as Volley, Sublayer, Sourcegraph, and AgentOps.

Gemini 2.0 Model Use Cases

| Model | Best For | Ideal Applications |
|---|---|---|
| Gemini 2.0 Flash | Real-time AI & speed | Chatbots, search engines, AI assistants, voice recognition |
| Gemini 2.0 Flash-Lite | Cost-effective AI | Customer support bots, lightweight AI assistants, automated content tagging |
| Gemini 2.0 Pro Experimental | Deep reasoning & coding | Advanced programming, AI-driven research, data analytics, high-end virtual assistants |
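The table above can be turned into a simple selection rule. This is a hypothetical helper, and the priority order (reasoning depth first, then cost) is an assumption made for the sketch, not guidance from Google.

```python
def pick_model(needs_deep_reasoning: bool, cost_sensitive: bool) -> str:
    """Pick a model name from the use-case table.

    Assumed priority: deep reasoning outranks cost; Flash is the default.
    """
    if needs_deep_reasoning:
        return "Gemini 2.0 Pro Experimental"
    if cost_sensitive:
        return "Gemini 2.0 Flash-Lite"
    return "Gemini 2.0 Flash"
```

A customer support bot with a tight budget would call `pick_model(False, True)`, while a research assistant would pass `needs_deep_reasoning=True`.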

Benchmark Comparison: How Does Gemini 2.0 Perform?

Each model in the Gemini 2.0 family has been rigorously tested across multiple AI benchmarks. Here’s a breakdown of how they stack up:

| Benchmark | Gemini 2.0 Flash | Gemini 2.0 Flash-Lite | Gemini 2.0 Pro Experimental |
|---|---|---|---|
| General Knowledge (MMLU-Pro) | 77.6% | 70.2% | 79.1% |
| Code Generation (LiveCodeBench) | 34.5% | 30.2% | 36.0% |
| Reasoning (GPQA) | 60.1% | 55.8% | 64.7% |
| Math Skills (HiddenMath/MATH) | 90.9% | 85.3% | 92.5% |
| Multilingual Understanding (Global MMLU – Lite) | 83.4% | 78.1% | 86.2% |
| Long-context (MRCR M1) | 70.5% | 58.0% | 70.7% |
| Image (MMMU) | 71.7% | 68.0% | 72.7% |
| Audio (CoVoST2 21 Lang) | 39% | 38.4% | 40.6% |
| Video (EgoScheme (test)) | 71.1% | 67.2% | 71.9% |

These results highlight the strengths of each model. Pro Experimental posts the highest score on every benchmark listed, Flash stays within five points of it while being built for speed and efficiency, and Flash-Lite trades some accuracy for lower cost, with its largest drop on long-context tasks.
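The figures in the benchmark table can also be compared programmatically. The sketch below copies the scores from the table and finds the top model per benchmark and the widest gap between two models. The function names and the shortened benchmark keys are chosen for this example only.

```python
from typing import Tuple

MODELS = ("Flash", "Flash-Lite", "Pro Experimental")

# Scores (percent) copied from the benchmark table, in MODELS order.
SCORES = {
    "MMLU-Pro": (77.6, 70.2, 79.1),
    "LiveCodeBench": (34.5, 30.2, 36.0),
    "GPQA": (60.1, 55.8, 64.7),
    "HiddenMath/MATH": (90.9, 85.3, 92.5),
    "Global MMLU (Lite)": (83.4, 78.1, 86.2),
    "MRCR": (70.5, 58.0, 70.7),
    "MMMU": (71.7, 68.0, 72.7),
    "CoVoST2": (39.0, 38.4, 40.6),
    "EgoScheme": (71.1, 67.2, 71.9),
}


def leader(benchmark: str) -> str:
    """Return the model with the highest score on one benchmark."""
    scores = SCORES[benchmark]
    return MODELS[scores.index(max(scores))]


def widest_gap(lower: str, higher: str) -> Tuple[str, float]:
    """Return (benchmark, gap) where `higher` most exceeds `lower`."""
    i, j = MODELS.index(lower), MODELS.index(higher)
    gaps = {name: round(s[j] - s[i], 1) for name, s in SCORES.items()}
    best = max(gaps, key=gaps.get)
    return best, gaps[best]
```

Calling `widest_gap("Flash-Lite", "Flash")` shows where the cheaper model gives up the most ground, which is a useful check before choosing Flash-Lite for a given workload.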

Conclusion

Gemini 2.0 is a groundbreaking advancement in artificial intelligence, providing fast, efficient, and scalable solutions for a variety of applications. Whether you’re looking for a cost-effective AI model, a real-time AI assistant, or a high-performance deep reasoning system, the Gemini 2.0 family has an option for you. As AI technology continues to evolve, Gemini 2.0 is poised to lead the way, setting new standards for performance and usability. For more information, visit the official site.

FAQs

Flash is optimized for speed and efficiency, while Pro Experimental offers deeper reasoning, advanced coding capabilities, and a larger context window for complex tasks.

Gemini 2.0 models can handle coding tasks. Gemini 2.0 Pro Experimental, in particular, excels in code generation and problem-solving, outperforming previous AI models in this area.

Developers and enterprises can integrate Gemini 2.0 into their applications through Google AI Studio and API services.

Gemini 2.0 models offer improved speed, larger context windows, better reasoning abilities, and enhanced multimodal support compared to the Gemini 1.5 series.

You can explore and integrate Gemini 2.0 through Google AI Studio and related APIs.
