Kimi K1.5 AI – A Revolutionary Leap in Multi-Modal Reinforcement Learning

Kimi K1.5 AI is an advanced multimodal large language model (MLLM) developed by Moonshot AI, an artificial intelligence (AI) company based in Beijing, China. It processes both text and images, and it sets itself apart by using reinforcement learning (RL) to strengthen its reasoning and problem-solving.

The model competes with industry leaders like OpenAI’s o1 and GPT-4o, with strong results in math, coding, and visual understanding. Its 128K-token context window lets it work through long inputs and extended chains of reasoning.

Whether you want to dive into research, education, or automation, Kimi K1.5 has the potential to change the way we interact with technology. In this blog, we’ll explore what makes Kimi K1.5 so special, how it works, and how you can use its capabilities to get the most out of it.

What is Kimi K1.5 AI?

Kimi K1.5 is a next-generation AI model designed to process and understand both text and images. Unlike models trained only to predict the next word on a fixed corpus, Kimi K1.5 is further trained with reinforcement learning, which rewards it for reaching correct answers and so improves its responses over time. Whether you need AI for complex reasoning, coding assistance, or vision-based tasks, this model delivers competitive performance.

Key Features of Kimi K1.5 AI

Reinforcement Learning for Enhanced AI Training

Traditional LLMs are trained using predefined datasets, but Kimi K1.5 takes a different approach by using reinforcement learning to explore and improve responses dynamically. As a result, the model generates higher-quality reasoning and can adapt to new challenges without relying solely on static data.

Long Context Scaling (128K Token Context Window)

One of the standout features of Kimi K1.5 is its 128K token context length, which significantly enhances its ability to handle long-form content, complex multi-step reasoning, and detailed problem-solving tasks.

Multi-Modal Capabilities (Text + Vision)

Kimi K1.5 is trained on both text and visual data, which allows it to perform tasks that require understanding both images and text. As a result, it is well-suited for applications like answering questions based on images, solving mathematical problems with diagrams, and processing documents that contain visual elements.

Simplified RL Framework

Unlike many other models that use reinforcement learning, Kimi K1.5 doesn’t rely on complex techniques like Monte Carlo tree search, value functions, or process reward models. Instead, it adopts a simpler and more efficient RL approach, balancing computational cost with accuracy.

Superior Short-CoT (Chain of Thought) Performance

Kimi K1.5 introduces a method called Long2Short, which allows it to compress long-chain reasoning into more efficient short-chain reasoning, outperforming GPT-4o and Claude 3.5 Sonnet by up to 550% in some tasks.

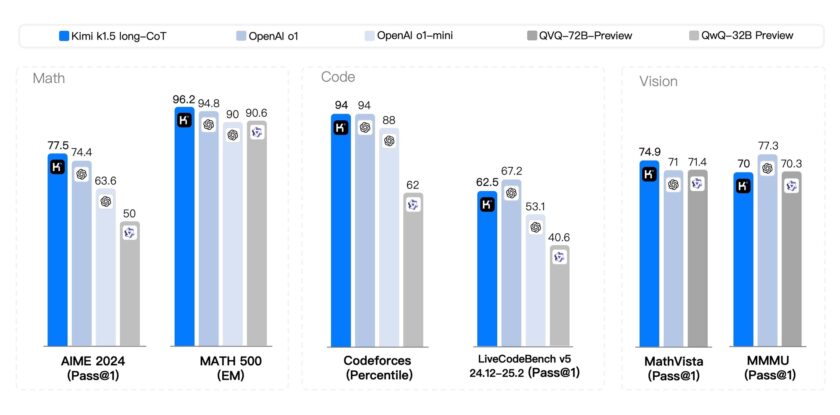

Performance Benchmarks

Kimi K1.5 has been evaluated across multiple industry-standard benchmarks, achieving state-of-the-art results in various domains:

| Benchmark | Kimi K1.5 | OpenAI o1 | GPT-4o | Claude 3.5 Sonnet |
|---|---|---|---|---|
| Math (MATH-500) | 96.2 | 94.8 | 90.6 | 90.2 |
| Codeforces (Competitive Coding Percentile) | 94 | 94 | 62 | 53.1 |
| LiveCodeBench (Code Reasoning) | 62.5 | 67.2 | 40.6 | 36.3 |
| MathVista (Vision Reasoning) | 74.9 | 71.0 | 70.3 | 66.4 |
| AIME (Mathematical Olympiad) | 77.5 | 74.4 | 50.0 | 39.2 |

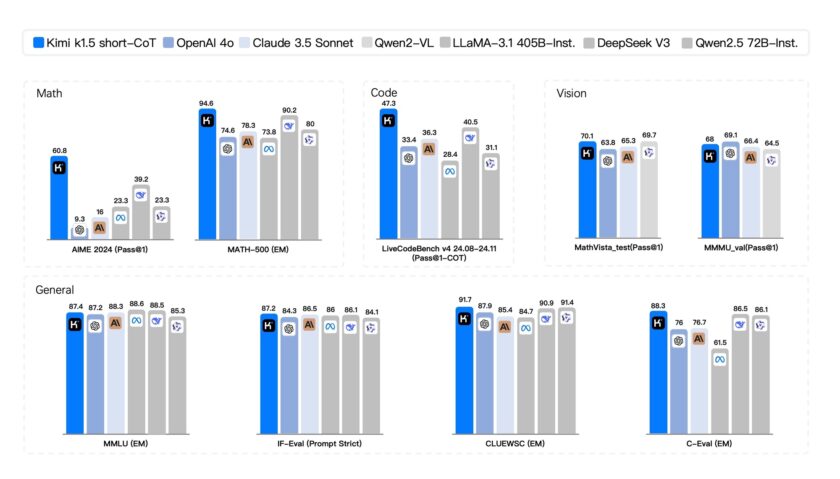

| Category | Kimi k1.5 short-CoT | GPT-4o | Claude 3.5 Sonnet | LLAMA-3 405B-Inst | Qwen 2.5 72B-Inst |
|---|---|---|---|---|---|
| Math (AIME 2024) | 60.8 | 9.3 | 16 | 23.3 | 39.2 |
| Math (MATH-500) | 94.6 | 74.6 | 78.3 | 90.2 | 73.8 |
| Code (LiveCodeBench) | 47.3 | 33.4 | 36.3 | 40.5 | 28.4 |
| Vision (MathVista test) | 70.1 | 63.8 | 65.3 | 80 | 69.7 |
| Vision (MMMU_val) | 68 | 69.1 | 66.4 | 64.5 | 64.5 |
| General (MMLU) | 87.4 | 87.2 | 88.3 | 85.3 | 86.5 |
| General (IF-Eval) | 87.2 | 84.3 | 86.5 | 84.1 | 86.1 |
| General (CLUEWSC) | 91.7 | 87.9 | 85.4 | 91.4 | 84.7 |
| General (C-Eval) | 88.3 | 76 | 76.7 | 86.1 | 61.5 |
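
To see where a figure like "up to 550%" can come from, here is a small worked calculation using the AIME 2024 short-CoT scores quoted in this article (60.8 for Kimi k1.5, 9.3 for GPT-4o, 16 for Claude 3.5 Sonnet). The `relative_gain` helper is a name made up for this illustration; it simply computes percentage improvement over a baseline.

```python
def relative_gain(score: float, baseline: float) -> float:
    """Return the percentage improvement of `score` over `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (score - baseline) / baseline * 100.0


# AIME 2024 short-CoT scores as quoted in this article.
aime_2024 = {
    "Kimi k1.5 short-CoT": 60.8,
    "GPT-4o": 9.3,
    "Claude 3.5 Sonnet": 16.0,
}

kimi = aime_2024["Kimi k1.5 short-CoT"]
for name in ("GPT-4o", "Claude 3.5 Sonnet"):
    gain = relative_gain(kimi, aime_2024[name])
    print(f"{name}: +{gain:.0f}%")
```

On these numbers the gain over GPT-4o works out to roughly 554%, which is consistent with the "up to 550%" claim. Note that this is a relative improvement on one benchmark, not a difference in percentage points.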

How Does Kimi K1.5 Work?

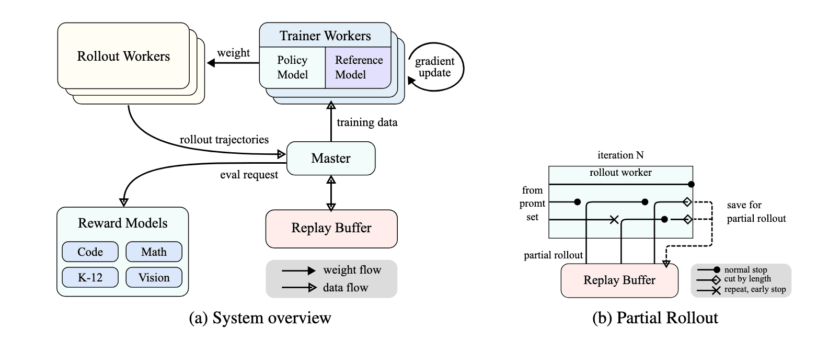

1. Reinforcement Learning Approach

- Data Expansion: Uses reinforcement learning to generate new training data dynamically, rather than relying only on static datasets.

- Policy Optimization: Implements an advanced mirror descent optimization technique to improve reasoning efficiency.

- Contextual Memory: Uses a partial rollout technique to manage long CoT (Chain-of-Thought) sequences efficiently.
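
The partial rollout idea above can be sketched with a toy simulation. This is only an illustration under stated assumptions, not Moonshot AI's implementation: the `Rollout` class, the `run_iteration` function, and the token budget of 1000 are all hypothetical, and "generation" is simulated by counting tokens. The point it shows is that a long response is capped per iteration, saved, and resumed later instead of blocking the whole batch.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class Rollout:
    prompt_id: int
    target_len: int     # total tokens this response will need (simulated)
    generated: int = 0  # tokens produced so far

    @property
    def done(self) -> bool:
        return self.generated >= self.target_len


def run_iteration(buffer: deque, budget: int) -> list:
    """Advance every buffered rollout by at most `budget` tokens.

    Finished rollouts are returned (ready for a training update); unfinished
    ones go back into the buffer and resume from their saved prefix next time.
    """
    finished = []
    for _ in range(len(buffer)):
        rollout = buffer.popleft()
        rollout.generated = min(rollout.target_len, rollout.generated + budget)
        if rollout.done:
            finished.append(rollout)
        else:
            buffer.append(rollout)
    return finished


# Three simulated responses of very different lengths.
buffer = deque([Rollout(0, 300), Rollout(1, 1200), Rollout(2, 2500)])
iteration = 0
while buffer:
    iteration += 1
    done = run_iteration(buffer, budget=1000)
    print(f"iteration {iteration}: finished prompts {[r.prompt_id for r in done]}")
```

In this toy run the short response finishes in the first iteration while the longest one is carried across three, so no single long chain of thought holds up the others.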

2. Multi-Modal Training

- Text Processing: Trained on natural language, coding, and reasoning datasets.

- Vision Understanding: Incorporates image-based learning for math, science, and object recognition tasks.

- Hybrid Learning: Combines real-world and synthetic vision data to improve contextual understanding.

3. Efficient Training Strategy

- Hybrid Training Infrastructure: Uses Kubernetes-based deployment for scalability and performance optimization.

- Adaptive Sampling Techniques: Implements curriculum learning and prioritized sampling to maximize learning efficiency.

- Length Penalty Control: Reduces overthinking by penalizing unnecessarily long responses while maintaining high accuracy.
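
Two of the strategies above, length penalty control and prioritized sampling, can be sketched in a few lines. Both functions are illustrative assumptions rather than the model's actual training code: `length_rewards` scores each sampled answer to the same problem by how long it is relative to the shortest and longest answers in the group, and `sampling_weights` samples problems more often when the model's success rate on them is low.

```python
def length_rewards(lengths, correct):
    """Length-based reward for a group of sampled answers to one problem.

    Assumed shape: lam runs from +0.5 (shortest answer) to -0.5 (longest).
    Correct answers receive lam; incorrect answers receive min(0, lam), so a
    wrong answer is never rewarded just for being short.
    """
    min_len, max_len = min(lengths), max(lengths)
    if max_len == min_len:
        return [0.0] * len(lengths)
    rewards = []
    for n, ok in zip(lengths, correct):
        lam = 0.5 - (n - min_len) / (max_len - min_len)
        rewards.append(lam if ok else min(0.0, lam))
    return rewards


def sampling_weights(success_rates):
    """Sampling probabilities proportional to (1 - success rate)."""
    raw = [1.0 - s for s in success_rates]
    total = sum(raw)
    if total == 0:
        # Every problem is already solved reliably: fall back to uniform.
        return [1.0 / len(raw)] * len(raw)
    return [w / total for w in raw]


# Three answers to one problem: short and correct, medium and correct,
# long and incorrect.
print(length_rewards([100, 300, 500], [True, True, False]))

# Problems with success rates of 90%, 50%, and 10%.
print([round(w, 3) for w in sampling_weights([0.9, 0.5, 0.1])])
```

The first call favors the short correct answer and penalizes the long incorrect one; the second gives the hardest problem the largest share of training samples.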

Real-World Applications of Kimi K1.5

Kimi K1.5 is designed to be a multi-purpose AI model, with applications across various industries:

1. AI-Powered Coding Assistance

- Outperforms GPT-4o in code completion and debugging.

- Excels in competitive programming challenges (LiveCodeBench, Codeforces).

2. Mathematical Problem Solving

- Solves complex algebra, geometry, and calculus problems with step-by-step reasoning.

- Achieves top-tier scores in AIME and MATH-500.

3. AI in Education

- Assists students with STEM learning, homework, and exam preparation.

- Offers step-by-step explanations for better knowledge retention.

4. Vision-Based AI Applications

- Interprets scientific graphs, charts, and visual data.

- Enhances image-based question-answering capabilities.

5. Business and Research Automation

- Improves document analysis, summarization, and data extraction.

- Automates research tasks requiring multi-modal reasoning.

How to Use Kimi K1.5 AI?

Using Kimi K1.5 is simple and user-friendly. Here’s how you can get started:

1. Access the Model

- Visit the official Kimi K1.5 GitHub repository for model downloads and API documentation.

- You can interact with Kimi AI through the web-based interface without needing any installation. Kimi can access the internet and supports file uploads (up to 50 files, 100 MB each). It accepts various file formats including PDF, DOC, XLSX, PPT, TXT, images, and more.

- Additionally, Kimi offers both a desktop app and a browser extension, both of which are free to use for interacting with the AI. Before you start, simply register and log in to begin.

2. Integration with Applications

- Developers can integrate Kimi K1.5 into applications via API endpoints.

- It supports various programming languages like Python, JavaScript, and more.
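
As a rough idea of what an integration might look like, the sketch below builds a chat request containing a text question and an image. Everything specific here is an assumption for illustration: the endpoint URL and model name are placeholders, and the OpenAI-style message layout is a common convention that may not match the real API. Check the official API documentation for the actual values before using it.

```python
import base64
import json
import urllib.request

API_URL = "https://api.example.com/v1/chat/completions"  # hypothetical endpoint
MODEL_NAME = "kimi-k1.5"  # hypothetical model identifier


def build_payload(question, image_bytes=None):
    """Build a chat payload with a text question and an optional image."""
    content = [{"type": "text", "text": question}]
    if image_bytes is not None:
        encoded = base64.b64encode(image_bytes).decode("ascii")
        content.append({
            "type": "image_url",
            "image_url": {"url": f"data:image/png;base64,{encoded}"},
        })
    return {
        "model": MODEL_NAME,
        "messages": [{"role": "user", "content": content}],
    }


def build_request(payload, api_key):
    """Wrap the payload in an HTTP POST request (nothing is sent here)."""
    return urllib.request.Request(
        API_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Placeholder bytes stand in for a real PNG file read from disk.
payload = build_payload("What does this chart show?", image_bytes=b"fake-png-bytes")
print(json.dumps(payload, indent=2))

# To actually send it, pass build_request(payload, api_key) to
# urllib.request.urlopen() once the real endpoint and key are known.
```

Keeping payload construction separate from sending makes the request easy to inspect and test before any network call is made.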

3. Fine-Tuning & Customization

- Users can fine-tune the model on specific datasets for customized outputs.

- Reinforcement learning settings allow advanced users to modify training parameters.

4. Deploying for Different Use Cases

- For content generation: Use Kimi K1.5 to create blogs, summaries, and creative writing.

- For coding assistance: Integrate with coding platforms for debugging and auto-completion.

- For education: Implement AI-powered tutoring systems for math and science.

Conclusion

Kimi K1.5 represents a major leap forward in AI technology, bringing together reinforcement learning, long-context scaling, and multi-modal capabilities into a single powerful model. By outperforming top-tier AI models in coding, math, and reasoning tasks, it paves the way for smarter, more adaptive AI applications. As AI continues to evolve, models like Kimi K1.5 will play a crucial role in education, research, automation, and beyond. Whether you’re an AI enthusiast, developer, or researcher, Kimi K1.5 is definitely a model to watch in the ever-expanding world of artificial intelligence.

FAQs

What makes Kimi K1.5 different from traditional LLMs?

Kimi K1.5 uses reinforcement learning, long context scaling, and multi-modal capabilities, making it superior in reasoning and adaptability compared to traditional LLMs.

Is Kimi K1.5 available on GitHub?

Yes, Kimi K1.5 is available on GitHub, allowing users to download, modify, and integrate it into their applications.

Can Kimi K1.5 process images as well as text?

Yes, Kimi K1.5 is a multi-modal model capable of processing both text and vision data for tasks like image recognition, visual question answering, and data extraction.

What hardware does Kimi K1.5 require?

Kimi K1.5 requires high-performance GPUs for optimal execution. Cloud-based services may also offer hosted versions for easier access.

How does Kimi K1.5 compare with GPT-4o?

Kimi K1.5 outperforms GPT-4o in short-CoT reasoning tasks and competitive programming, thanks to its reinforcement learning and long-context memory enhancements.

Can Kimi K1.5 be fine-tuned?

Yes, developers can fine-tune Kimi K1.5 using their own datasets to optimize performance for specialized applications.
